Testing Software Requirements: Essential Skills for Success

Think of testing software requirements as the very first line of defense in any project. It's the process of kicking the tires on your project's blueprint—the documented requirements—to make sure they’re clear, complete, consistent, and actually testable before a single line of code gets written.

This isn't just about ticking a box. It's a critical quality check that stops expensive rework in its tracks, gets everyone on the same page, and ensures the final product solves the right problem. In short, it’s your best weapon against scope creep and project failure.

Why Testing Requirements Is Your First Big Win

Imagine an architect's blueprint that just says "build a strong house" or "put in some nice windows." You’d end up with a mess of conflicting ideas, last-minute changes, and a final product that no one is happy with. Software development works the same way. Poorly defined requirements are among the most common causes of project headaches, leading to features that miss the mark, frustrated teams, and budgets that go up in smoke.

This is why testing software requirements isn't just a preliminary task; it's your first major victory. It flips quality assurance from a last-minute bug hunt into a proactive strategy session, making sure what you build is exactly what you need.

The Real Cost of Skipping This Step

When you jump straight into development without vetting your requirements, you’re inviting trouble. If a requirement is vague or missing key details, developers have no choice but to guess. And those guesses often don't line up with what the stakeholders actually wanted, creating a domino effect of costly problems.

The fallout is almost always the same:

- Painful Rework: Fixing a bug in the code is one thing. Fixing a fundamental flaw in the requirements after the code is written is far more expensive, because the cost goes beyond rewriting code: you also have to re-test, re-deploy, and re-document everything.

- Uncontrolled Scope Creep: Unclear requirements are a breeding ground for new features and unexpected "clarifications" that derail your timeline and bloat the budget.

- Team Friction: Without a single source of truth, you'll see friction build between business analysts, developers, QA, and stakeholders. Everyone ends up working from their own interpretation.

- Total Project Failure: In the worst-case scenario, the finished product simply doesn’t solve the core business problem, making the entire investment a waste.

The Strategic Edge of Getting It Right Early

On the flip side, spending time validating requirements upfront has a powerful ripple effect that benefits the entire project. It's a strategic investment that pays for itself long before you start coding. When you get the requirements right from day one, you create a shared vision that acts as a north star for every decision that follows.

A well-tested requirement isn't just a clear instruction; it's a contract. It’s an agreement between everyone involved that cements the business vision, the technical plan, and the user's needs into one cohesive goal.

This early alignment is why the industry pours so much money into quality assurance. By one projection, the global software testing market will pass USD 45 billion by 2025, though estimates vary by source. Some companies even dedicate up to 40% of their entire development budget to testing, because they see it as a real business advantage. You can find more stats and trends on the software testing industry to see just how central it has become.

Before diving into the "how," let's establish a baseline. Every single requirement, no matter how small, should be checked against a core set of quality attributes. This simple checklist is your first filter for catching common problems.

Core Requirements Validation Checklist

| Attribute | Description | Why It Matters |
|---|---|---|
| Complete | Does the requirement contain all necessary information for design and development? No missing pieces? | Prevents developers from having to make assumptions that could lead to incorrect implementation. |
| Correct | Does it accurately state a business or user need? Is the information factually right? | Ensures you're building the right feature to solve the right problem. |
| Clear | Is the requirement written in simple, unambiguous language? Can it only be interpreted one way? | Avoids confusion and misinterpretation between stakeholders, developers, and testers. |
| Consistent | Does it contradict any other requirement? Does it align with the overall project goals? | Prevents logical conflicts in the system that cause bugs and design dead-ends. |
| Testable | Can you define clear pass/fail criteria for this requirement? Is it verifiable? | If you can't test it, you can't prove it's been implemented correctly. |
| Feasible | Can this requirement be realistically implemented with the available tech, budget, and time? | Stops you from committing to features that are impossible or impractical to build. |
| Necessary | Is this requirement truly needed to meet the business objective? Does it add real value? | Helps you avoid "gold plating" and scope creep by focusing only on what's essential. |

Think of this table as your go-to reference. Running every requirement through this filter will dramatically improve the quality of your project's foundation and make the rest of the process much smoother.
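
If you want to track the checklist in something sturdier than a meeting note, here is a minimal sketch of one way to record a review in Python. The `RequirementReview` class, the `REQ-001` ID, and the verdict values are all made up for illustration; the only thing taken from the table is the list of seven attributes.

```python
from dataclasses import dataclass, field

# The seven attributes from the checklist table.
ATTRIBUTES = ("complete", "correct", "clear", "consistent",
              "testable", "feasible", "necessary")


@dataclass
class RequirementReview:
    """One review of a single requirement (hypothetical structure)."""
    requirement_id: str
    text: str
    # Maps attribute name -> True (passes) or False (fails).
    verdicts: dict = field(default_factory=dict)

    def failed_attributes(self):
        """Attributes that failed or were never assessed, in checklist order."""
        return [a for a in ATTRIBUTES if not self.verdicts.get(a, False)]

    def is_ready(self):
        """A requirement is ready only when every attribute has passed."""
        return not self.failed_attributes()


# Illustrative review of a deliberately vague requirement.
review = RequirementReview(
    requirement_id="REQ-001",
    text="The user registration process should be fast.",
    verdicts={"complete": True, "correct": True, "clear": False,
              "consistent": True, "testable": False,
              "feasible": True, "necessary": True},
)
print(review.failed_attributes())  # ['clear', 'testable']
```

Treating "not yet assessed" the same as "failed" is a deliberate choice in this sketch: a requirement nobody has looked at should not slip through as ready.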

Proven Techniques for Validating Requirements

Once you've got a set of requirements that look good on paper, it's time to see how they hold up under a little pressure. This is where you bring in real human insight to move beyond theory and actively hunt for weaknesses, fuzzy language, and unspoken assumptions. The best way to test software requirements involves collaboration—getting different perspectives in the same room.

These methods aren't just about catching mistakes. They're about building a shared understanding from the very start. When a developer, a designer, and a business analyst all look at the same requirement, they'll inevitably spot gaps that one person working alone would have missed entirely.

Facilitating Structured Walkthroughs and Reviews

One of the most powerful tools in your arsenal is the structured walkthrough. This isn’t just another meeting; it's a focused session where the person who wrote the requirements guides everyone else through the document, one feature at a time. The whole point is to get everyone on the same page and catch misunderstandings early.

To make a walkthrough truly effective, you need a little structure:

- Assign clear roles: You need a moderator to keep things on track, a scribe to capture every piece of feedback, and reviewers who show up prepared with questions.

- Focus on clarity, not solutions: The goal here is to find requirements that are confusing or incomplete. Avoid the temptation to start redesigning the system on the spot—save those discussions for a separate meeting.

- Bring in a diverse crew: Make sure you have both technical folks (developers, QA) and non-technical stakeholders (business users, marketing) in the room. This well-rounded perspective is crucial.

A requirement walkthrough isn't a presentation; it's a collaborative investigation. Its success hinges on creating a safe environment where every participant feels comfortable asking "dumb" questions that often uncover critical misunderstandings.

This process is a cornerstone of most software quality assurance processes, baking quality in right from the beginning.

Using Prototypes to Make Requirements Tangible

Words on a page can only take you so far. Sometimes, the best way to test a requirement is to show people what it will actually feel like to use the software. This is where prototyping and wireframing shine, turning abstract concepts into something concrete that users can see and interact with.

Even a simple, low-fidelity wireframe can immediately highlight a confusing workflow or a major usability issue that was completely hidden in a text document. For example, a requirement might say, "The user must be able to easily find their order history." A quick prototype could reveal that the "Order History" link is buried three clicks deep—hardly an "easy" experience.

This visual feedback loop is priceless for a few reasons:

- It exposes the gap between what stakeholders say they want and what they actually need when they see it in action.

- It allows for quick and cheap iterations before a single line of code is written.

- It gives non-technical stakeholders an easy way to provide meaningful feedback on the user experience.

By combining structured discussions with visual prototypes, you create a powerful system for testing your requirements. You’ll catch problems when they’re easy to fix and ensure the final product is something people will actually want to use.

How to Write Genuinely Testable Requirements

Here's a hard truth I've learned over the years: if you can't test a requirement, it's not a requirement. It's just a suggestion.

The whole point of writing testable requirements is to rip out any ambiguity and bake verification right into the project's DNA. This simple shift moves your team from guessing games and subjective debates to a world of objective, provable outcomes.

The secret sauce is swapping vague, wishy-washy language for specific, measurable statements. A tiny change in how you phrase something can be the difference between a feature that everyone loves and one that causes endless headaches and rework.

From Vague to Verifiable

Let's get practical. The core skill in testing software requirements is turning foggy statements into crystal-clear instructions that leave zero room for interpretation. It’s a game of specifics.

Here’s what not to do (The Vague Version):

- "The user registration process should be fast."

- "The system should handle a large number of users."

- "The user interface should be intuitive."

These are impossible to test. What on earth does "fast" or "large" or "intuitive" actually mean? Your idea of fast is probably very different from your lead engineer's, which is different again from your end user's.

And here's how to fix it (The Testable Version):

- "User registration, from hitting 'submit' to receiving the confirmation email, must complete in under 3 seconds."

- "The system must support 500 concurrent users logging in within a 1-minute window, with every login response returned in under 2 seconds."

- "A new user must be able to successfully create a new project in fewer than 5 clicks without needing the help docs."

See what happened there? Each revised requirement is now a clear, measurable target. A test can either pass or fail. No gray area.
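
To show what "a test can either pass or fail" looks like in practice, here is a rough Python sketch of a check for the 3-second registration requirement. The `register_user` function is a hypothetical stand-in that just sleeps briefly; a real check would drive the actual sign-up flow and wait for the confirmation email.

```python
import time

MAX_REGISTRATION_SECONDS = 3.0  # threshold taken from the requirement text


def register_user(email):
    """Hypothetical stand-in for the real registration flow."""
    time.sleep(0.01)  # simulate a small amount of work
    return {"email": email, "confirmation_sent": True}


def check_registration_time(email):
    """Return (passed, elapsed_seconds) for one registration attempt."""
    start = time.perf_counter()
    result = register_user(email)
    elapsed = time.perf_counter() - start
    passed = result["confirmation_sent"] and elapsed < MAX_REGISTRATION_SECONDS
    return passed, elapsed


passed, elapsed = check_registration_time("new.user@example.com")
print("PASS" if passed else "FAIL", f"({elapsed:.3f}s)")
```

Notice that the vague version ("should be fast") gives you nothing to put in `MAX_REGISTRATION_SECONDS`. The number only exists because the requirement stated it.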

Defining Airtight Acceptance Criteria

Okay, so making requirements measurable is step one. But you also need to define airtight acceptance criteria. Think of these as the specific, non-negotiable conditions a feature must meet before anyone can call it "done."

A great way to frame these is using the "Given-When-Then" format, which is a lifesaver for getting developers and testers on the same page. If you want to dive deeper into this structure, our guide on writing user stories is a fantastic resource.

A requirement without acceptance criteria is like a destination without a map. You might get there eventually, but you'll probably get lost along the way.

This level of precision isn't just a "nice-to-have" anymore. With software becoming more complex by the day, it's essential. One estimate puts the global software testing market at USD 54.68 billion in 2023, on track to reach nearly USD 100 billion by 2035. That growth reflects an intense, industry-wide focus on provable quality.

Ensuring Total Coverage with a Traceability Matrix

So, how do you make sure nothing falls through the cracks? You use a Requirements Traceability Matrix (RTM).

Don't let the fancy name fool you. It's usually just a simple spreadsheet, but it's incredibly powerful. An RTM links every single requirement directly to its corresponding test cases, creating a clear map from the initial business need all the way through to final verification.

With an RTM, you get a bird's-eye view of your test coverage and can answer critical questions instantly:

- Which requirements actually have test cases written for them?

- Which test cases are validating this specific requirement?

- If a requirement changes, which tests do we need to update?

This simple document is your safety net. It’s what ensures every single piece of functionality gets the attention it deserves.

Weaving Requirements Testing into Your Workflow

In the old days of software development, testing was often the final hurdle—a last-minute check before pushing the big red "launch" button. But that approach just doesn't fly anymore. To build great software today, you have to treat requirements testing as a continuous activity, not a one-time event. It needs to be part of your team's DNA, woven directly into how you work, especially in Agile and DevOps environments.

This is what people mean when they talk about the "shift-left" mindset. The concept is pretty simple: catch problems as early as humanly possible. Instead of waiting for the QA team to flag an issue weeks after it was coded, you spot the ambiguity during a planning session. This proactive approach saves an incredible amount of time and headache by preventing misunderstandings from ever turning into buggy code.

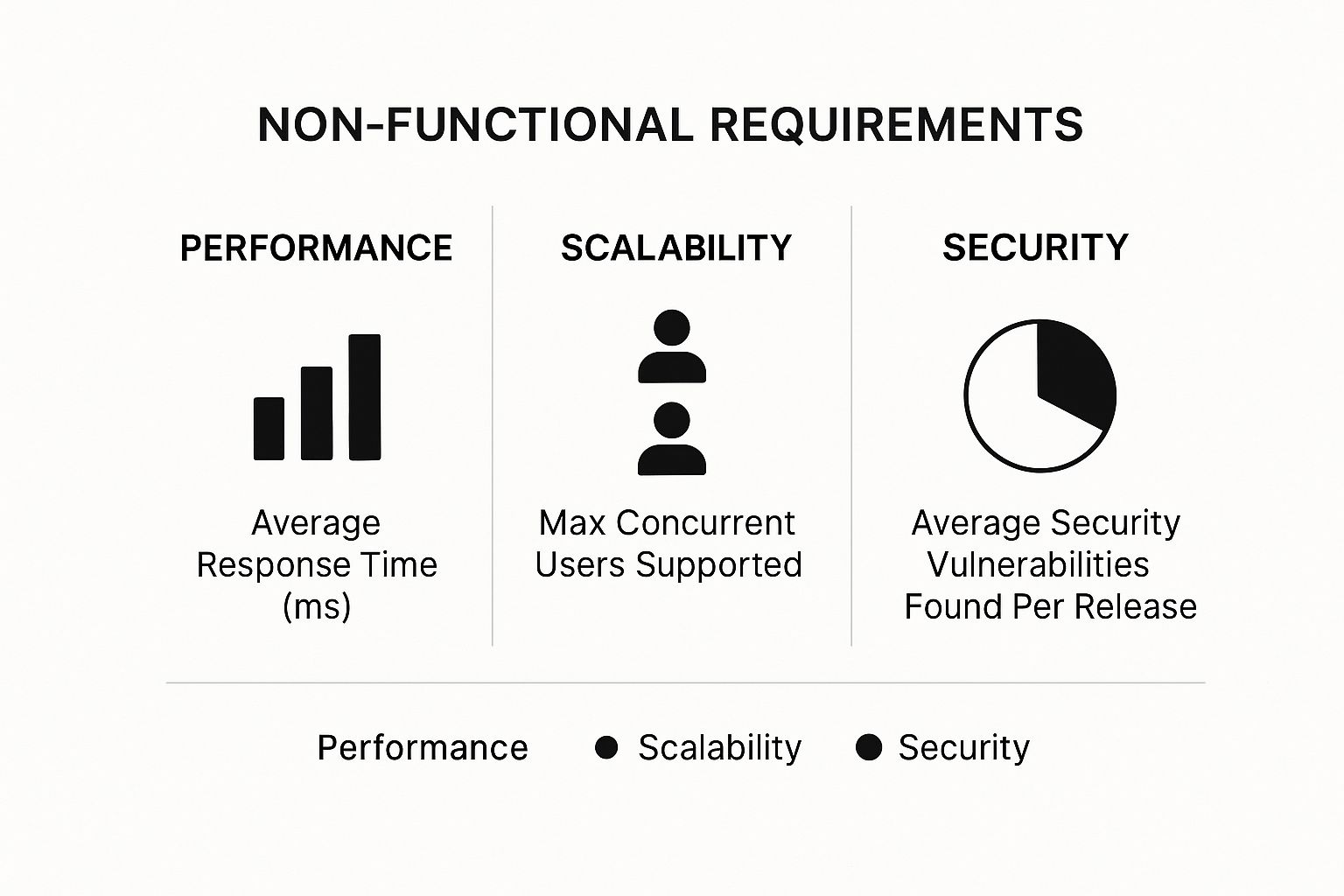

This is especially true for non-functional requirements. Things like performance, security, and scalability can't be bolted on at the end. They have to be considered from the start.

Tracking these metrics gives you a solid baseline for success. It helps turn vague goals like "the app should be fast" into concrete, testable targets that everyone can agree on.

The Old Way vs. The New Way

To really see the difference, it helps to compare how requirements are handled in traditional Waterfall projects versus modern Agile ones. The contrast is stark.

Here’s a quick breakdown of how requirements testing looks in Waterfall vs. Agile:

| Aspect | Waterfall Approach | Agile Approach |

|---|---|---|

| Timing | A distinct phase at the end of development. | Continuous, integrated into every sprint. |

| Ownership | Primarily the QA team or a dedicated testing group. | A shared responsibility for the entire team. |

| Process | Formal sign-off on a large requirements document. | Informal, collaborative validation during grooming and planning. |

| Feedback Loop | Very long; issues are found weeks or months after coding. | Extremely short; feedback is immediate, often within the same day. |

In short, Agile turns testing from a gatekeeper into a partner in the development process, which is a much healthier and more effective way to build software.

Make Validation a Team Sport

In a healthy Agile team, validating requirements isn't just the product owner's job. It’s everyone’s responsibility, and it happens naturally during your regular ceremonies.

- Backlog Grooming: This is your best shot. As you go through user stories, the entire team—devs, QA, designers, everyone—should be poking holes in them. Is this clear enough to build? Can we actually test this? Does "simple" mean the same thing to the designer as it does to the backend engineer?

- Sprint Planning: Just before you commit to a story for the upcoming sprint, give it one last look. Make sure everyone is crystal clear on the acceptance criteria and that any dependencies are sorted out. This final check can prevent so many mid-sprint "uh-oh" moments.

When you make this collaborative review a habit, you build a culture where quality is baked in from the start. Of course, good documentation is a huge help here. If you need a refresher, check out these technical documentation best practices.

Use BDD to Get Everyone on the Same Page

One of the most powerful tools for integrating testing into your daily workflow is Behavior-Driven Development (BDD). BDD uses a simple, structured language (usually Gherkin) to describe how the software should behave from a user's perspective.

I've always found that the biggest value of BDD isn't the testing automation—it's the conversation. It forces business folks, developers, and testers to use the same language, which eliminates a massive amount of confusion right up front.

A BDD scenario is written in a plain English format that anyone can understand:

Feature: User Login

  Scenario: Successful login with valid credentials
    Given the user is on the login page
    When they enter a valid username and password
    And they click the "Login" button
    Then they should be redirected to their dashboard

The magic here is that this is both a clear requirement for the product owner and an executable test script that can be automated. This dual purpose is a massive win for efficiency. It's no surprise that 75% of technology teams now integrate continuous testing into their DevOps pipelines, leading to 40% faster release cycles.
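
To make the "executable" half of that claim concrete, here is a minimal sketch in plain Python that mirrors the login scenario step by step. BDD frameworks such as behave or pytest-bdd bind each Gherkin step to a function for you; this example deliberately skips them and uses comments instead, and `FakeApp` with its single hard-coded user is a hypothetical stand-in for the real application.

```python
class FakeApp:
    """Hypothetical in-memory stand-in for the application under test."""

    USERS = {"alice": "s3cret"}  # made-up credentials

    def __init__(self):
        self.current_page = None

    def open_login_page(self):
        self.current_page = "login"

    def submit_login(self, username, password):
        # Valid credentials redirect to the dashboard; anything else stays put.
        if self.USERS.get(username) == password:
            self.current_page = "dashboard"
        else:
            self.current_page = "login"


def test_successful_login_with_valid_credentials():
    app = FakeApp()
    # Given the user is on the login page
    app.open_login_page()
    assert app.current_page == "login"
    # When they enter a valid username and password
    # And they click the "Login" button
    app.submit_login("alice", "s3cret")
    # Then they should be redirected to their dashboard
    assert app.current_page == "dashboard"


test_successful_login_with_valid_credentials()
print("scenario passed")
```

Each line of the scenario maps to one action or one assertion, which is why the same text can serve the product owner and the test suite.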

As the software testing market heads toward a projected USD 94 billion by 2030, techniques like BDD are moving from "nice-to-have" to "must-have." By building these practices into your workflow, you’re no longer just testing for problems at the end; you're engineering for success from the very beginning.

Choosing the Right Tools for Requirements Validation

While human collaboration is the heart of getting requirements right, the right tools are the central nervous system. They keep everything connected, traceable, and organized. Trying to manage hundreds of requirements in a massive spreadsheet is a classic recipe for disaster—it's just asking for inconsistency and errors.

A good toolset doesn't just store requirements; it creates a living ecosystem. Every requirement should be linked to business goals, user stories, and eventually, test cases. This gives you a single source of truth, which is absolutely critical for maintaining clarity as projects inevitably evolve.

The Foundation: Requirements Management Platforms

You'll want to lean on industry-standard platforms built to handle the complexities of modern software development. Their entire purpose is to nail traceability, making sure every line of code or test case can be tracked back to its original requirement.

Here are three heavy hitters you'll see out in the wild:

- Jira: A favorite among Agile teams, Jira is fantastic at linking user stories and tasks directly to larger epics. It creates a clear line of sight from the initial idea all the way to implementation.

- Jama Connect: This one is purpose-built for highly complex or regulated systems. It offers incredibly robust traceability, risk management, and formal review workflows, which are non-negotiable in industries like medical or aerospace.

- Azure DevOps: Microsoft's all-in-one solution integrates requirements management directly into the entire development pipeline—from version control and CI/CD to the actual testing.

The best platform really depends on your team's methodology and scale. A nimble startup can get a ton of mileage out of Jira's flexibility. But a large enterprise building mission-critical hardware will probably need the rigorous controls of Jama Connect. And if security is a top concern, it's worth exploring specialized advanced web security management tools that can be integrated into your process.

The Rise of AI in Requirements Analysis

A powerful new player has entered the game: artificial intelligence. AI-powered tools can scan entire requirements documents in seconds, catching potential issues that a human might easily overlook. We're seeing this approach slash test case creation time by up to a staggering 80%.

AI doesn’t replace the critical thinking of a business analyst or tester. Think of it as an incredibly fast and tireless assistant. It flags potential ambiguities, contradictions, or missing details so your team can focus their brainpower on solving the right problems.

These tools are particularly good at a few key things:

- Detecting Ambiguity: They can instantly spot vague words like "fast," "user-friendly," or "robust" that are impossible to test without more specific criteria.

- Checking for Consistency: They ensure that a requirement defined in one document doesn’t contradict another one buried deep in a different spec.

- Generating Test Cases: They can create a solid baseline of positive and negative test cases directly from the requirement text, giving your QA team a massive head start.
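
You don't need AI to get a feel for the first of these. The sketch below is a simple rule-based check in Python, not how any particular AI tool works, and the word list is an assumption you would tune for your own team. It flags vague terms so a human can ask for specifics.

```python
import re

# Illustrative word list; a real team would maintain its own.
VAGUE_TERMS = ("fast", "user-friendly", "robust", "intuitive", "easily", "large")


def find_vague_terms(requirement):
    """Return the vague terms found in a requirement, in word-list order."""
    lowered = requirement.lower()
    found = []
    for term in VAGUE_TERMS:
        # \b keeps "fast" from matching inside words such as "breakfast".
        if re.search(r"\b" + re.escape(term) + r"\b", lowered):
            found.append(term)
    return found


print(find_vague_terms("The user registration process should be fast."))
# ['fast']
print(find_vague_terms("Registration must complete in under 3 seconds."))
# []
```

A keyword check like this catches only the obvious offenders. Contradictions between documents and missing details are much harder to spot mechanically.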

By combining a solid management platform with the analytical power of AI, you can build a formidable defense against bad requirements. This leads to a smoother, faster, and ultimately more successful development cycle.

Common Questions About Requirements Testing

As teams start to get serious about validating requirements, a few questions always pop up. Getting these sorted out early on helps everyone move from just talking about it to actually doing it. It clarifies who does what and how this all fits into the bigger project lifecycle.

Let's clear up some of the most common points of confusion.

What Is the Difference Between Requirements Validation and Verification?

This is a big one. People often mix these two up, but they're fundamentally different checks. I like to use a simple house-building analogy.

- Validation asks, "Are we building the right house?" This is about making sure the requirements actually solve the real-world problem for the user and hit the business goals. It's the "what."

- Verification asks, "Are we building the house right?" This is about checking if the requirements themselves are solid—are they clear, consistent, complete, and something we can actually test against? This is the "how."

You can't have one without the other. Validation makes sure you're aimed at the correct destination, and verification ensures your map is accurate and readable.

Who Is Ultimately Responsible for Testing Requirements?

I see a lot of teams stumble over this. The answer? It’s a team sport. Sure, a Business Analyst or Product Owner might be the one writing everything down, but they can't—and shouldn't—validate requirements on their own.

True ownership is collaborative. A requirement is only truly "tested" when developers confirm it's technically possible, QA engineers agree it can be tested, and stakeholders sign off that it meets their needs. Pinning this on one person is a surefire way to miss something critical.

When everyone shares ownership, you break down those frustrating silos. It gets all the right perspectives in the room before a single line of code is written, which is exactly what a good "shift-left" approach is all about.

How Should We Handle Changing Requirements?

Let's be real: requirements are going to change. Change itself isn't the problem; it's how you manage it. You need a clear process; otherwise, you're just inviting chaos.

A solid change management process usually involves a few key steps:

- Assess the Impact: The first thing you do is pull out your traceability matrix. This should immediately show you which other requirements, test cases, and bits of the system will be affected.

- Evaluate the Trade-Off: Next, you have to weigh the pros and cons. What’s the business value of this change versus the extra cost and time it'll take? Is it a genuine must-have, or is it a nice-to-have that can wait?

- Document and Communicate: If the change gets the green light, update all the documentation. But even more importantly, tell the whole team what’s changing and why. Over-communication is your friend here.
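
The "assess the impact" step can be sketched as a small graph walk. In the Python example below, `DEPENDENTS` and `TESTS` are hypothetical data standing in for what a traceability matrix would hold; the function follows downstream requirements and collects every test that may need updating.

```python
from collections import deque

# Hypothetical data: which requirements build on which, and which tests cover each.
DEPENDENTS = {
    "REQ-001": ["REQ-002"],  # REQ-002 depends on REQ-001
    "REQ-002": ["REQ-004"],
    "REQ-003": [],
    "REQ-004": [],
}
TESTS = {
    "REQ-001": ["TC-101"],
    "REQ-002": ["TC-102", "TC-201"],
    "REQ-003": ["TC-301"],
    "REQ-004": ["TC-401"],
}


def assess_impact(changed):
    """Return (affected requirements, affected tests) for one changed requirement."""
    affected = []
    seen = {changed}
    queue = deque([changed])
    while queue:  # breadth-first walk over downstream requirements
        current = queue.popleft()
        affected.append(current)
        for dep in DEPENDENTS.get(current, []):
            if dep not in seen:
                seen.add(dep)
                queue.append(dep)
    tests = sorted({t for req in affected for t in TESTS.get(req, [])})
    return affected, tests


print(assess_impact("REQ-001"))
# (['REQ-001', 'REQ-002', 'REQ-004'], ['TC-101', 'TC-102', 'TC-201', 'TC-401'])
```

Having that list in hand makes the trade-off conversation much easier, because the cost side is no longer a guess.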

If you’re working in an Agile environment, this flow is already part of the routine. Changes are handled through a prioritized backlog and discussed in each sprint. A good process turns a potential disruption into a calm, strategic decision.

At 42 Coffee Cups, we build high-performance web applications where quality is non-negotiable, right from the first conversation about requirements. Whether you need to launch an MVP quickly, tackle technical debt, or bring in expert Next.js and Python/Django developers, we build it right, from the start.